Magnitude Scale To Measure Brightness

Astronomers name individual stars like Vega, Betelgeuse, and Sirius. However, they need a way to describe their brightness. Read on to learn more about the magnitude scale.

Besides naming individual stars, astronomers need a way to describe their brightness, and they do so with the magnitude scale. This measurement system first appears in the writings of Ptolemy, around AD 140. However, some astronomers attribute an earlier version of it to the Greek astronomer Hipparchus, who compiled the first known star catalog. Some believe that he may have used the magnitude system in that catalog.

Magnitude Scale

It is fun to know the names of the stars in the sky, but remember that stars are more than names: they have atmospheres and conditions unlike anything here on Earth. Ptolemy used the magnitude system in his catalog, and successive generations of astronomers have continued to use it. How did they decide to divide the stars into magnitude classes?

The ancient astronomers divided the stars into six classes. The brightest were called first-magnitude stars, and those that were fainter were called second-magnitude stars. The scale continued down to sixth-magnitude stars, the faintest visible to the human eye. As a result, the larger the magnitude number, the fainter the star. This makes sense if you think of the brightest stars as first-class stars and the faintest stars as sixth-class stars.

Credit: Las Cumbres Observatory

Ancient Astronomers

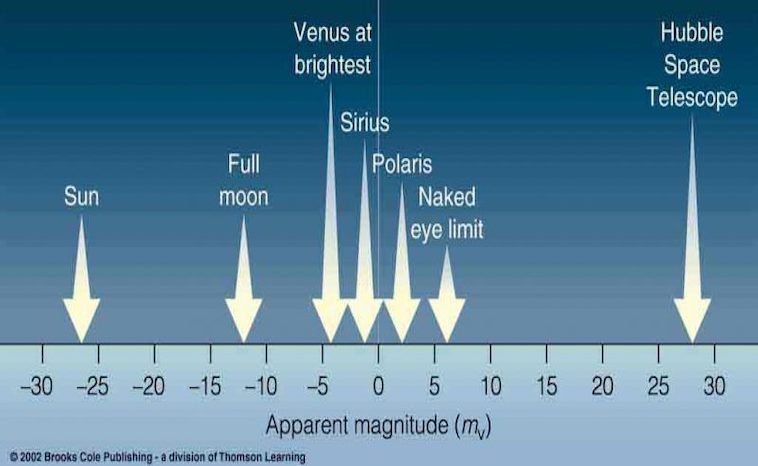

Ancient astronomers could only estimate magnitudes. Modern astronomers, however, can measure the brightness of stars with high precision, so they have refined the scale. Instead of saying that the star Chort (Theta Leonis) is a third-magnitude star, they say it has a magnitude of 3.34. Accurate measurements also show that some stars are brighter than magnitude 1.0. For example, Vega (Alpha Lyrae) is so bright that its magnitude, 0.04, is almost zero. A few stars are so bright that their magnitudes are negative. On this scale, Sirius, the brightest star in the sky, has a magnitude of -1.47.
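Because a smaller number means a brighter star, ranking stars by brightness is just a matter of sorting their magnitudes in ascending order, negative values included. The short Python sketch below illustrates this using only the three magnitudes quoted above; the dictionary name `STARS` and the function `brightest_first` are hypothetical names chosen for illustration.

```python
# Apparent magnitudes quoted in the text (hypothetical container name).
STARS = {
    "Chort (Theta Leonis)": 3.34,
    "Vega (Alpha Lyrae)": 0.04,
    "Sirius": -1.47,
}


def brightest_first(stars: dict[str, float]) -> list[str]:
    """Return star names ordered from brightest to faintest.

    On the magnitude scale a smaller (or more negative) number means a
    brighter star, so sorting by ascending magnitude puts the brightest first.
    """
    return sorted(stars, key=stars.get)


if __name__ == "__main__":
    for name in brightest_first(STARS):
        print(f"{name}: {STARS[name]:+.2f}")
```

Running it lists Sirius first, then Vega, then Chort, which matches the rule that the larger the magnitude number, the fainter the star.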

Modern astronomers have had to extend the faint end of the magnitude scale as well. The faintest stars you can see with your unaided eyes are about the sixth magnitude. Meanwhile, if you use a telescope, you may see stars that appear much fainter. Astronomers must use magnitude numbers larger than 6 to describe these faint stars.
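The sketch below shows the same idea from the faint end: a star whose magnitude number is larger than the naked-eye limit is too faint to see without optical aid. It assumes a round cutoff of 6.0 because the text gives the limit only as "about the sixth magnitude"; the real limit varies with sky conditions and eyesight. The constant `NAKED_EYE_LIMIT`, the function `needs_telescope`, and the sample value 8.5 are hypothetical.

```python
# Assumption: the text says the faintest naked-eye stars are "about the
# sixth magnitude", so 6.0 is used here as a hypothetical round-number cutoff.
NAKED_EYE_LIMIT = 6.0


def needs_telescope(magnitude: float, limit: float = NAKED_EYE_LIMIT) -> bool:
    """Return True if a star is fainter than the naked-eye limit.

    Fainter means a larger magnitude number, so any magnitude above the
    limit is too faint to see with unaided eyes.
    """
    return magnitude > limit


if __name__ == "__main__":
    # -1.47 and 3.34 come from the text; 8.5 is a hypothetical faint star.
    for mag in (-1.47, 3.34, 6.0, 8.5):
        where = "telescope" if needs_telescope(mag) else "unaided eye"
        print(f"magnitude {mag:+.2f}: {where}")
```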

Credit: Brooks Publishing

Apparent Visual Magnitudes

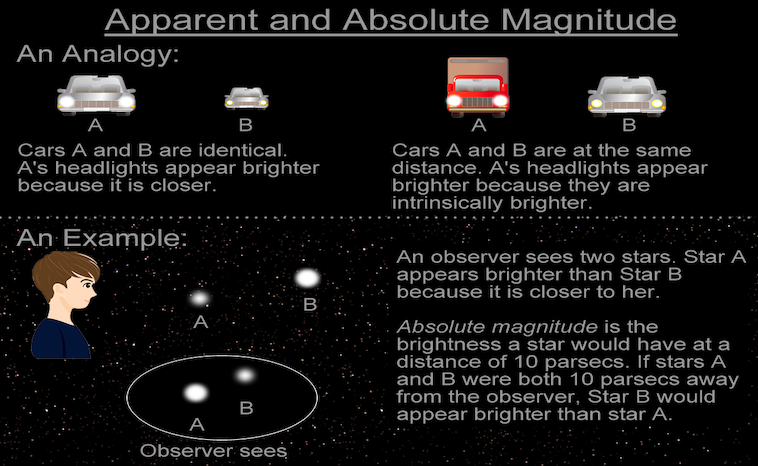

The numbers that describe how bright stars look to human eyes observing from Earth are called apparent visual magnitudes. Some stars emit large amounts of infrared or ultraviolet radiation, which human eyes cannot see.

Consequently, that radiation is not included in a star's apparent visual magnitude. Apparent visual magnitude is written with a lowercase letter m and a subscript V (m_V). The V stands for "visual" and reminds you that only visible light is included on the scale. This scale also does not take into account the distance to the stars: very distant stars look fainter, and nearby stars look brighter. Apparent visual magnitude ignores the effect of distance and tells you only how bright the star looks as seen from Earth.

Astronomers have been describing the brightness of stars since the days of Hipparchus. So tonight, when you look into the sky and see bright stars and faint stars, you will know that there is a scale to measure them.